Leave us your email address and be the first to receive a notification when Robin posts a new blog.

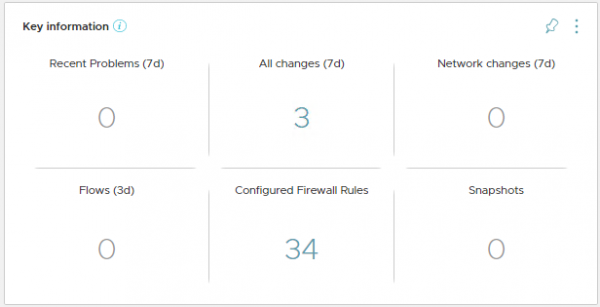

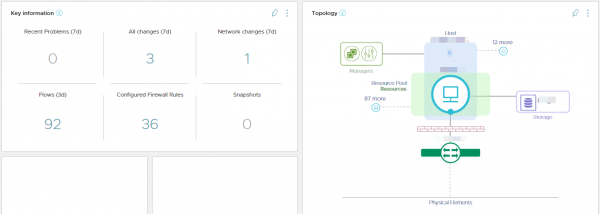

Our first guess was that something had gone wrong in the way vRNI processes the flows. However, even after going through the logs together with a VMware engineer, we could not find a solution. As shown in the screenshot above, vRNI did not report any flows based on the VM name, and vRNI itself did not give us the answer. To keep the implementation going, we created a workaround by querying the logs in vRNI using the IP address instead of the VM name. This let us continue our search in the meantime.

While investigating the flows in vRNI, we were able to build the first set of firewall rules for the applications, including the application consisting of several VMs for which we could only see flows based on the IP address. Normally, when we add rules to the NSX Distributed Firewall, we also add a validation rule of some sort to make sure the rules are working correctly before we start blocking traffic.

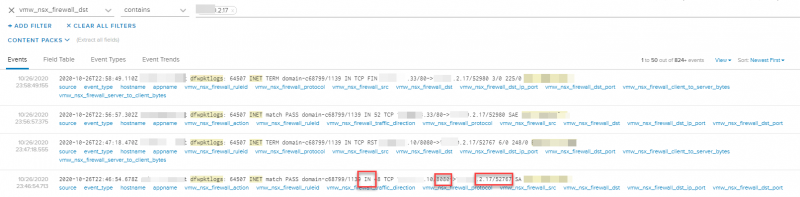

In the logs from the Distributed Firewall we found some entries that did not relate to normal traffic flows.

While filtering on the destination IP address xx.xx.2.17, we found inbound traffic towards this IP address that looked like return traffic for sessions initiated by the VM. This traffic showed up in the logs because it did not hit the firewall rules we had created for this application.

The first event in the example above shows traffic from xx.xx.xx.33 on source port 80 being sent to xx.xx.2.17 on port 52980. As we suspected, this was the return traffic of a TCP session that our VM with IP address xx.xx.2.17 had set up towards xx.xx.xx.33 on port 80. This raised the question: why wasn't this traffic allowed by the rules allocated to this VM? Since all VMs that did not show any flows by VM name in vRNI had the same issue, we suspected that something was wrong with the VMs themselves. But what?
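The reasoning above relies on the fact that a reply packet carries the original flow's addresses and ports swapped. The sketch below models that with a simplified flow key; it is not NSX's internal data structure, and the IP addresses are documentation placeholders standing in for the masked ones in the log.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    """Simplified TCP flow key (hypothetical model, not NSX internals)."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int

    def reverse(self) -> "Flow":
        """Return the key that reply packets of this flow carry."""
        return Flow(self.dst_ip, self.dst_port, self.src_ip, self.src_port)


def is_return_traffic(packet: Flow, sessions: set) -> bool:
    """True if the packet is a reply to a session in the state table."""
    return packet.reverse() in sessions


# Session opened by the VM: ephemeral port 52980 towards port 80.
# Placeholder addresses (192.0.2.17 = the VM, 198.51.100.33 = the server).
outbound = Flow("192.0.2.17", 52980, "198.51.100.33", 80)
state_table = {outbound}

# The logged inbound event: source port 80, destination port 52980.
inbound = Flow("198.51.100.33", 80, "192.0.2.17", 52980)
print(is_return_traffic(inbound, state_table))  # True
```

The inbound event only counts as return traffic when the firewall that sees it holds the matching session in its state table, which is exactly what turned out to be missing here.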

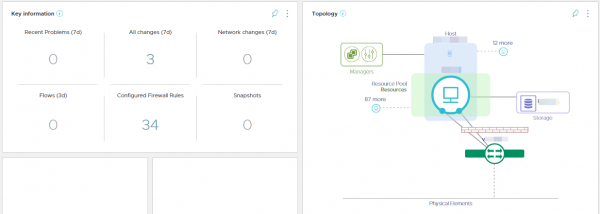

The answer to this question can be deduced from the VM overview in vRNI. The difference between the "working" VMs and the VMs without any flows by VM name was that the latter each had two virtual network interfaces. So we asked the customer why these VMs had been configured with two network interfaces. It turned out that the virtual servers were configured with a NIC team, something we had not considered, but it did end our search.
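To check a larger inventory for the same pattern, a minimal sketch could simply flag every VM with more than one vNIC. The `VirtualMachine` class, the VM names, and the inventory below are hypothetical; in practice the data would come from the vRNI VM overview or vCenter, whose APIs are not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    """Hypothetical inventory record: a VM name and its vNICs."""
    name: str
    vnics: list = field(default_factory=list)


def multi_vnic_vms(inventory):
    """Return the names of VMs with more than one vNIC (teaming suspects)."""
    return [vm.name for vm in inventory if len(vm.vnics) > 1]


# Hypothetical example inventory.
inventory = [
    VirtualMachine("app-01", ["vnic0"]),
    VirtualMachine("app-02", ["vnic0", "vnic1"]),
    VirtualMachine("db-01", ["vnic0"]),
]
print(multi_vnic_vms(inventory))  # ['app-02']
```

A second vNIC is not a problem by itself; it only points to VMs worth checking for in-guest teaming.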

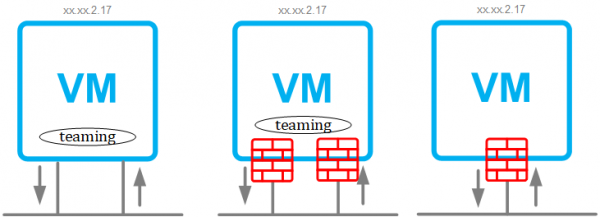

This issue can be explained using the three VMs in the image above. The first VM on the left has two interfaces with teaming software running inside the VM. Depending on its configuration, the teaming can use one network interface for sending and the other for receiving traffic. Although this is not an optimal setup, there may be some rare use cases for it, and it does work.

When we add the NSX Distributed Firewall to the environment, each virtual network interface gets its own firewall. If the teaming software in the VM is still in use, traffic is sent out through one firewall and received on another (asymmetric traffic). The returning traffic is therefore dropped by the second firewall, since it does not match an established session or connection in that firewall.

If the second interface and the teaming are removed from the VM, the returning traffic passes through the same firewall as the outgoing traffic and is allowed, since it matches an established session or connection.
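The difference between the teamed and the single-vNIC case can be illustrated with a toy model in which each vNIC has its own stateful firewall with its own connection table. This is a simplified sketch, not how NSX implements the Distributed Firewall: it assumes outbound traffic is allowed by an application rule and that no rule allows unsolicited inbound traffic, and it uses placeholder IP addresses.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    """Simplified TCP flow key (hypothetical model)."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int

    def reverse(self) -> "Flow":
        return Flow(self.dst_ip, self.dst_port, self.src_ip, self.src_port)


class VnicFirewall:
    """Toy stateful filter attached to one vNIC, with its own state table."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.sessions = set()

    def egress(self, pkt: Flow) -> bool:
        # Assumed: an application rule allows the outbound connection,
        # and the session is recorded in THIS vNIC's table only.
        self.sessions.add(pkt)
        return True

    def ingress(self, pkt: Flow) -> bool:
        # Replies pass only if this same instance recorded the session;
        # assumed: no rule allows unsolicited inbound traffic.
        return pkt.reverse() in self.sessions


request = Flow("192.0.2.17", 52980, "198.51.100.33", 80)
reply = request.reverse()

# Teamed VM: send on vnic0, receive on vnic1 (asymmetric path).
fw0, fw1 = VnicFirewall("vnic0"), VnicFirewall("vnic1")
fw0.egress(request)
print("teamed:", fw1.ingress(reply))  # teamed: False (dropped)

# Single-vNIC VM: both directions cross the same firewall instance.
fw = VnicFirewall("vnic0")
fw.egress(request)
print("single:", fw.ingress(reply))  # single: True (allowed)
```

The reply is identical in both cases; only the firewall instance that sees it changes, and with it whether the session state is available.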

After we changed the VM back to a single virtual network interface, the Distributed Firewall started working as expected. Most importantly, the flows became visible in vRNI.

In conclusion, sometimes the answer to a question can be very simple, and it can be something you didn't consider in the first place. Hopefully this blog post makes it easier for you to spot these types of issues and saves you the time it took us to figure this one out.

Questions, Remarks & Comments

If you have any questions or need more clarification, we are more than happy to dig deeper. Any comments are also appreciated. You can either post them online or send them directly to the author; it's your choice.
